The Silent Breach: Shadow AI and the $670K "Innovation Tax"

While the industry focused on nation-state hackers in 2025, the most pervasive threat of 2026 is coming from inside the office.

New research from BlackFog and IBM reveals that Shadow AI, the use of unauthorized AI tools by employees, now accounts for 20% of all enterprise breaches.

What’s more alarming? These incidents cost an average of $670,000 more to remediate than traditional data breaches due to the "black box" nature of third-party AI training sets.

The 2026 Shadow AI Landscape

The data suggests that the "Speed Over Security" mindset has reached a breaking point.

Employees aren't malicious; they are just trying to meet 2026's hyper-accelerated productivity demands.

Shadow AI Risk Landscape (2026 Forecast)

| Metric | Percentage |
|---|---|
| Weekly AI Use | 86% |
| C-Suite: Speed > Security | 69% |
| Shadow AI: PII Involved | 65% |
| Global Avg: PII Involved | 53% |
| Unsanctioned AI Use | 49% |

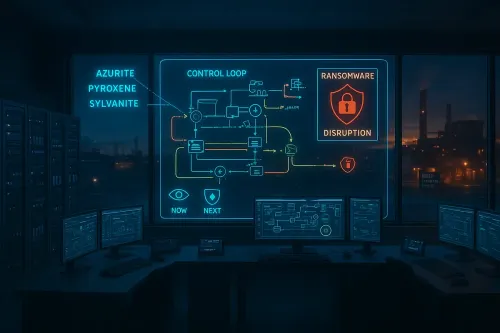

How the Leak Happens [Technical Breakdown]

For the Hacklido community, the threat isn't just "pasting text."

In 2026, the attack surface has evolved into three distinct tiers:

1. The "Prompt-to-Training" Pipeline

Free tiers of GenAI services often feed user prompts into the training data for future models.

When an engineer pastes 2,000 lines of proprietary code to debug it, that code can land in the provider's training set and is effectively out of the company's control.

If a competitor later prompts the same model for "best practices in [Specific Proprietary Logic]," the AI may hallucinate or, worse, accurately reproduce the leaked logic.
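
One way to blunt this pipeline is a pre-submission check that runs before a prompt ever leaves the laptop. The Python sketch below is illustrative only: the `Copyright (c) Acme Corp` header, the `acme_internal` namespace, and the 200-line cut-off are hypothetical stand-ins for whatever markers your own codebase carries, and the code-detection heuristic is deliberately crude.

```python
import re
from dataclasses import dataclass, field

# Hypothetical markers: swap in your organization's real headers and namespaces.
PROPRIETARY_PATTERNS = [
    re.compile(r"Copyright \(c\) Acme Corp", re.IGNORECASE),
    re.compile(r"\bacme_internal\.[A-Za-z_]+"),
    re.compile(r"CONFIDENTIAL|INTERNAL USE ONLY"),
]
MAX_CODE_LINES = 200  # arbitrary "bulk paste" threshold for this sketch


@dataclass
class PasteVerdict:
    allowed: bool
    reasons: list[str] = field(default_factory=list)


def looks_like_code(line: str) -> bool:
    """Crude heuristic: flag lines that start or end like typical source code."""
    stripped = line.strip()
    return stripped.endswith((";", "{", "}", ")")) or stripped.startswith(
        ("def ", "class ", "import ", "#include")
    )


def check_prompt(prompt: str) -> PasteVerdict:
    """Return whether a prompt may be sent, plus the reasons it was blocked."""
    reasons = [
        f"proprietary marker: {p.pattern}" for p in PROPRIETARY_PATTERNS if p.search(prompt)
    ]
    code_lines = sum(1 for line in prompt.splitlines() if looks_like_code(line))
    if code_lines > MAX_CODE_LINES:
        reasons.append(f"{code_lines} code-like lines (limit {MAX_CODE_LINES})")
    return PasteVerdict(allowed=not reasons, reasons=reasons)


if __name__ == "__main__":
    verdict = check_prompt("from acme_internal.billing import reconcile\nreconcile()")
    print(verdict.allowed, verdict.reasons)
```

In practice a check like this would live in a browser extension or proxy rather than depend on employees running it. It catches obvious bulk pastes, not paraphrased logic.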

2. Unsanctioned Agentic AI (The "OpenClaw" Crisis)

We are currently seeing a surge in "Agentic Shadow AI."

Tools like the open-source OpenClaw allow users to build autonomous agents.

However, researchers recently discovered 335 malicious "skills" in public marketplaces; these skills install keyloggers on Windows and macOS.
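
Before an agent skill gets near a workstation, even a crude static scan can surface the loudest red flags. This is a minimal sketch under stated assumptions: it assumes a skill ships as a folder of text files (OpenClaw's actual package format is not covered here), and the regex list is an illustrative sample, not a vetted detection ruleset. A clean result proves nothing; obfuscated payloads will slip past.

```python
import re
from pathlib import Path

# Illustrative red flags only; this is not a vetted detection ruleset.
RED_FLAGS = {
    "keyboard hook": re.compile(r"pynput|GetAsyncKeyState|SetWindowsHookEx|CGEventTapCreate"),
    "pipe-to-shell install": re.compile(r"(?:curl|wget)[^\n|]*\|\s*(?:ba)?sh\b"),
    "encoded payload": re.compile(r"base64\s+(?:-d|--decode)|b64decode\("),
    "persistence": re.compile(r"LaunchAgents|crontab\s+-|\\CurrentVersion\\Run"),
}


def scan_text(text: str) -> list[str]:
    """Return the sorted names of every red flag that appears in the text."""
    return sorted(name for name, rx in RED_FLAGS.items() if rx.search(text))


def scan_skill_dir(root: str) -> dict[str, list[str]]:
    """Scan every file under a skill folder (assumed layout) and report hits per file."""
    findings: dict[str, list[str]] = {}
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8", errors="ignore")
        except OSError:
            continue  # unreadable file: skip it rather than abort the whole scan
        hits = scan_text(text)
        if hits:
            findings[str(path)] = hits
    return findings
```

Point `scan_skill_dir("path/to/unpacked-skill")` at a downloaded skill before installing it; anything it flags deserves a manual read, and so does anything it misses.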

The Hacklido Note: These agents often run with the user's elevated OAuth tokens. Because those tokens were issued after the user already passed MFA, a compromised "meeting summarizer" agent can effectively bypass MFA and scrape the entire corporate Slack or Salesforce instance.
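
Since the agent inherits a token that has already cleared MFA, the practical control is limiting what that token can do. The sketch below compares the OAuth scopes granted to an agent across its integrations against a least-privilege profile. The scope strings follow real Slack, Google, and Salesforce naming, but the `EXPECTED_SCOPES` profile and the `HIGH_RISK_SCOPES` list are hypothetical examples you would tailor to your own tenant.

```python
# Hypothetical least-privilege profiles: the scopes each agent type should need.
# Scope names mirror real Slack, Google, and Salesforce scopes; the mapping is illustrative.
EXPECTED_SCOPES: dict[str, set[str]] = {
    "meeting-summarizer": {
        "https://www.googleapis.com/auth/calendar.readonly",
        "chat:write",
    },
}

# Scopes that open bulk read access to chat history or CRM data (illustrative list).
HIGH_RISK_SCOPES = {"channels:history", "groups:history", "im:history", "api", "full"}


def audit_agent(agent_type: str, granted: set[str]) -> dict[str, set[str]]:
    """Compare granted scopes with the agent's expected profile.

    Unknown agent types get an empty profile, so every granted scope counts as excess.
    """
    expected = EXPECTED_SCOPES.get(agent_type, set())
    excess = granted - expected
    return {"excess": excess, "high_risk": excess & HIGH_RISK_SCOPES}


if __name__ == "__main__":
    report = audit_agent(
        "meeting-summarizer",
        {"chat:write", "channels:history", "full"},
    )
    print(sorted(report["high_risk"]))  # ['channels:history', 'full']
```

Treating unknown agents as "everything is excess" is a deliberate default: an agent nobody has profiled should not keep broad scopes by accident.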

3. API Sprawl & Ghost Users

Enterprises now manage an average of 613 APIs, many of which are "Shadow APIs" connected to AI tools.

These create unsupervised pathways for data exfiltration that traditional firewalls and EDR (Endpoint Detection and Response) tools cannot see.
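
Egress logs will not reveal every shadow pathway (traffic relayed through a sanctioned vendor's own backend is invisible here), but they make a cheap first inventory. This sketch assumes a hypothetical proxy log format of `<timestamp> <user> <host>` per line and a short, non-exhaustive list of public AI API hostnames.

```python
from collections import Counter

# Public AI API hostnames; a small, non-exhaustive sample for this sketch.
KNOWN_AI_HOSTS = {
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
    "api.mistral.ai",
}


def find_shadow_ai(log_lines: list[str], sanctioned_hosts: set[str]) -> Counter:
    """Count requests per (user, host) to AI APIs missing from the sanctioned list.

    Assumes a hypothetical proxy log format: '<timestamp> <user> <host>' per line.
    """
    hits: Counter = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip malformed lines
        _, user, host = parts[:3]
        host = host.lower().rstrip(".")  # normalize case and trailing DNS dot
        if host in KNOWN_AI_HOSTS and host not in sanctioned_hosts:
            hits[(user, host)] += 1
    return hits


if __name__ == "__main__":
    sample = [
        "2026-01-05T09:00Z alice api.openai.com",
        "2026-01-05T09:01Z alice api.openai.com",
        "2026-01-05T09:02Z bob api.anthropic.com",
        "2026-01-05T09:03Z carol intranet.example.com",
    ]
    for (user, host), count in find_shadow_ai(sample, {"api.anthropic.com"}).most_common():
        print(f"{user} -> {host}: {count} requests")  # alice -> api.openai.com: 2 requests
```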

Hacklido Pro-Tips:

Banning AI has failed; 2025 proved that employees simply find workarounds.

The 2026 defense strategy focuses on "Secure Enablement":

- LLM Firewalls: Deploying a real-time sanitization layer between the user and the LLM.

- DSPM (Data Security Posture Management): Implementing tools that specifically scan for "AI-processed data".

- The "No-AI-Without-AI" Rule: Using AI-Native Red Teaming to simulate prompt-injection attacks daily.
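
To make the "LLM Firewall" idea concrete, here is a minimal sanitization layer that swaps sensitive values for placeholders before a prompt is forwarded, then restores them in the model's reply. The three regexes (email, AWS access key ID, US SSN) and the `vault` mapping are illustrative assumptions; a production DLP policy covers far more data types and would not rely on regex alone.

```python
import re

# Illustrative patterns only: email, AWS access key ID, US Social Security number.
REDACTION_RULES = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("AWS_KEY", re.compile(r"\bAKIA[0-9A-Z]{16}\b")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
]


def sanitize(prompt: str) -> tuple[str, dict[str, str]]:
    """Swap sensitive values for placeholders; return the clean prompt and a restore map."""
    vault: dict[str, str] = {}

    for label, pattern in REDACTION_RULES:
        def swap(match: re.Match, label: str = label) -> str:
            token = f"[{label}_{len(vault)}]"  # unique, numbered placeholder
            vault[token] = match.group(0)
            return token

        prompt = pattern.sub(swap, prompt)
    return prompt, vault


def restore(reply: str, vault: dict[str, str]) -> str:
    """Put the original values back into the model's reply, locally."""
    for token, original in vault.items():
        reply = reply.replace(token, original)
    return reply


if __name__ == "__main__":
    clean, vault = sanitize("Email jane@corp.com, key AKIAABCDEFGHIJKLMNOP")
    print(clean)  # Email [EMAIL_0], key [AWS_KEY_1]
    # In a real gateway, `clean` is what would be forwarded to the LLM.
    print(restore(clean, vault))
```

Keeping the originals in a local map means the model only ever sees placeholders, while the user still gets a readable answer back.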

Stay ahead. Stay dangerous.

Team Hacklido ❤️

Join our Community –

https://t.me/hacklido